| Step Details | |
|---|---|
| Introduced in Version | 9.3.0 |
| Last Modified in Version | 9.13.0 |
| Step Location(s) | AI > Anthropic > Chat Completion; AI > Google Gemini > Chat Completion; AI > Common > Chat Completion; AI > Open AI > Chat Completion |

The Chat Completion step generates a Chat Response and is available in several AI Modules. Users supply Model and AI Prompt information, and the step outputs a Chat Response. To use this step, Users must have AI Common and the desired AI Module installed, because these Modules are used to generate the Chat Response. Since the same step is available across several AI Modules, Users can switch between models without adding another step.

Prerequisites

- This step requires the AI Common Module to be installed before it will be available in the toolbox.

- Users must create a Project Dependency for the AI Common Module and for any other AI Module used with the Chat Completion step.

- Users need to be on v9.3.0 or later to access this step.

Properties

Settings

| Property | Description |
|---|---|
| Override Default Provider | Available when the step is used through AI Common. Checking this box lets Users override the default provider (AI Common) and choose another AI Module, such as Google Gemini. |
| Override API Key (v9.13+) | Lets Users supply a different API key instead of the key set at the System level in the module configuration. |
| Get Provider From Flow | Lets the AI provider be picked from Flow data instead of being hard-coded on the step, so the provider can change dynamically. |
| Select Model | A checkbox that lets Users select a specific Model for the step to use. |
| Model | A drop-down menu that appears when Users check Select Model. It lists the models available for the module the step is pulled from, based on which AI Modules are installed in the Decisions environment. |
| Get Model From Flow | Lets Users select the model dynamically. Checking this box adds a new string input called Model to the step. |
| Select Prompt | Checking this box selects a predesigned Prompt instead of writing and managing the prompt directly in the Flow. |
| Get Prompt from Flow | Appears when Users check Select Prompt. Lets Users supply the prompt from the Flow. |
| Use TextMerge Prompt | Appears when Users check Select Prompt. Clicking the plus icon on the right-hand side of the drop-down menu opens a form for selecting a prompt built with the AI Prompt Manager. |
| Prompt | If none of the checkboxes above are selected, a prompt can be entered manually in the Prompt field. |
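The way Get Provider From Flow and Get Model From Flow shift a design-time choice to run time can be pictured with the Python sketch below. It is a hypothetical model of the behavior, not Decisions code: `StepSettings`, `resolve_provider_and_model`, the `INSTALLED_PROVIDERS` set, and the `Provider` input name are assumptions for illustration; only the `Model` string input comes from the setting description above.

```python
from dataclasses import dataclass
from typing import Mapping, Optional

# Hypothetical provider names; in Decisions these correspond to installed AI Modules.
INSTALLED_PROVIDERS = {"AI Common", "Anthropic", "Google Gemini", "Open AI"}


@dataclass
class StepSettings:
    """Design-time values, as they might be set in the Properties Panel (hypothetical)."""
    provider: str = "AI Common"
    model: Optional[str] = None
    get_provider_from_flow: bool = False
    get_model_from_flow: bool = False


def resolve_provider_and_model(
    settings: StepSettings, flow_data: Mapping[str, str]
) -> tuple[str, Optional[str]]:
    """Return the (provider, model) pair the step would use for one run."""
    provider = settings.provider
    if settings.get_provider_from_flow:
        provider = flow_data["Provider"]  # hypothetical input name
    if provider not in INSTALLED_PROVIDERS:
        raise ValueError(f"Unknown or uninstalled provider: {provider!r}")

    model = settings.model
    if settings.get_model_from_flow:
        model = flow_data["Model"]  # string input added by Get Model From Flow
    return provider, model
```

With both flow options checked, passing `{"Provider": "Google Gemini", "Model": "gemini-2.0-flash"}` resolves to `("Google Gemini", "gemini-2.0-flash")`, while a step with neither option checked keeps its design-time provider.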

Inputs

| Property | Description | Data Type |
|---|---|---|
| Override Variables | Users can Build an Array on this input to expose the Find and Replace fields, then designate variables in both fields to customize the Chat Response output. | List of AIPromptVariables |
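As a rough illustration of how Find and Replace pairs can customize a prompt, the Python sketch below applies each pair to the prompt text in array order. It assumes plain, literal substitution of every occurrence; `PromptVariable`, `apply_override_variables`, and the `{Topic}`-style placeholder syntax are hypothetical and may not match how the step or the AI Prompt Manager actually marks variables.

```python
from dataclasses import dataclass


@dataclass
class PromptVariable:
    """One Find/Replace pair from the Override Variables array (hypothetical model)."""
    find: str
    replace: str


def apply_override_variables(prompt: str, variables: list[PromptVariable]) -> str:
    """Apply each Find/Replace pair to the prompt text, in array order.

    Assumes literal (non-regex) substitution of every occurrence; the step's
    actual matching rules may differ.
    """
    for var in variables:
        if not var.find:
            continue  # skip empty Find values; str.replace("", x) would insert x everywhere
        prompt = prompt.replace(var.find, var.replace)
    return prompt


example = apply_override_variables(
    "Summarize {Topic} for a {Audience} reader.",
    [PromptVariable("{Topic}", "quarterly sales"), PromptVariable("{Audience}", "non-technical")],
)
```

Here `example` is `"Summarize quarterly sales for a non-technical reader."`. Because pairs are applied in order, a later Find value could match text inserted by an earlier Replace value, so distinct placeholders are safer.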

Outputs

| Property | Description | Data Type |
|---|---|---|
| Chat Response | A list of AIChatCompletion results generated with the selected model, prompt, and override variables (if applicable). | List of AIChatCompletion |

Common Errors

Using a Bad API Key

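When the Chat Completion step is used with the Open AI Module, it will not return the desired results unless the API Key is working properly. If an incorrect API Key is entered on the Open AI Settings Page, or the associated Open AI account cannot fulfill the request, the step errors out in the Debugger. The exception below, for example, is an HTTP 429 insufficient_quota error, returned when the account has exceeded its current quota.

To correct this, verify that the API Key is accurate and that the associated Open AI account has no plan or billing issues.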
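When troubleshooting, it can help to separate a rejected key from an account problem. The Python sketch below reads the HTTP status embedded in the exception text, such as `HTTP 429 (insufficient_quota: insufficient_quota)` in the exception shown below, and returns a rough category. The 401 and generic 429 branches follow OpenAI's documented error codes, but the exact wording the step produces for them is an assumption, and `diagnose_openai_error` is a hypothetical helper, not part of Decisions.

```python
import re
from typing import Optional

# Matches the status and optional parenthesized detail in the step's exception
# text, e.g. "HTTP 429 (insufficient_quota: insufficient_quota)".
_HTTP_ERROR = re.compile(r"HTTP (\d{3})(?: \(([^)]*)\))?")


def diagnose_openai_error(message: str) -> Optional[str]:
    """Return a rough category for an Open AI error message, or None if no HTTP status is found."""
    match = _HTTP_ERROR.search(message)
    if match is None:
        return None
    status = int(match.group(1))
    detail = match.group(2) or ""
    if status == 401:
        return "invalid_key"      # key rejected: check the API Key setting
    if status == 429 and "insufficient_quota" in detail:
        return "quota_exceeded"   # key accepted, but the account is out of quota
    if status == 429:
        return "rate_limited"     # too many requests; retry later
    return "other"
```

Applied to the exception in this section, it returns `"quota_exceeded"`, which points to the plan and billing fix rather than a key change.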

Exception Message:

Exception Stack Trace: DecisionsFramework.Design.Flow.ErrorRunningFlowStep: Error running step Chat Completion 2[CreateChatCompletionStep] in flow [Using an AI Prompt in a Flow]: HTTP 429 (insufficient_quota: insufficient_quota) You exceeded your current quota, please check your plan and billing details. For more information on this error, read the docs: https://platform.openai.com/docs/guides/error-codes/api-errors.

---> System.ClientModel.ClientResultException: HTTP 429 (insufficient_quota: insufficient_quota) You exceeded your current quota, please check your plan and billing details. For more information on this error, read the docs: https://platform.openai.com/docs/guides/error-codes/api-errors. at OpenAI.ClientPipelineExtensions.ProcessMessage(ClientPipeline pipeline, PipelineMessage message, RequestOptions options)

at OpenAI.Chat.ChatClient.CompleteChat(BinaryContent content, RequestOptions options)

at OpenAI.Chat.ChatClient.CompleteChat(IEnumerable`1 messages, ChatCompletionOptions options, CancellationToken cancellationToken)

at OpenAI.Chat.ChatClient.CompleteChat(ChatMessage[] messages)

at Decisions.AI.OpenAI.Steps.Completions.CreateChatCompletionStep.CreateChatCompletion(CreateChatCompletionRequest request)

at Decisions.AI.OpenAI.Steps.Completions.CreateChatCompletionStep.Run(StepStartData data)

at DecisionsFramework.Design.Flow.FlowStep.RunStepInternal(String flowTrackingID, String stepTrackingID, KeyValuePairDataStructure[] stepRunDataValues, AbstractFlowTrackingData trackingData)

at DecisionsFramework.Design.Flow.FlowStep.Start(String flowTrackingID, String stepTrackingID, FlowStateData data, AbstractFlowTrackingData trackingData, RunningStepData currentStepData)

--- End of inner exception stack trace ---

Using the Chat Completion Step in a Flow

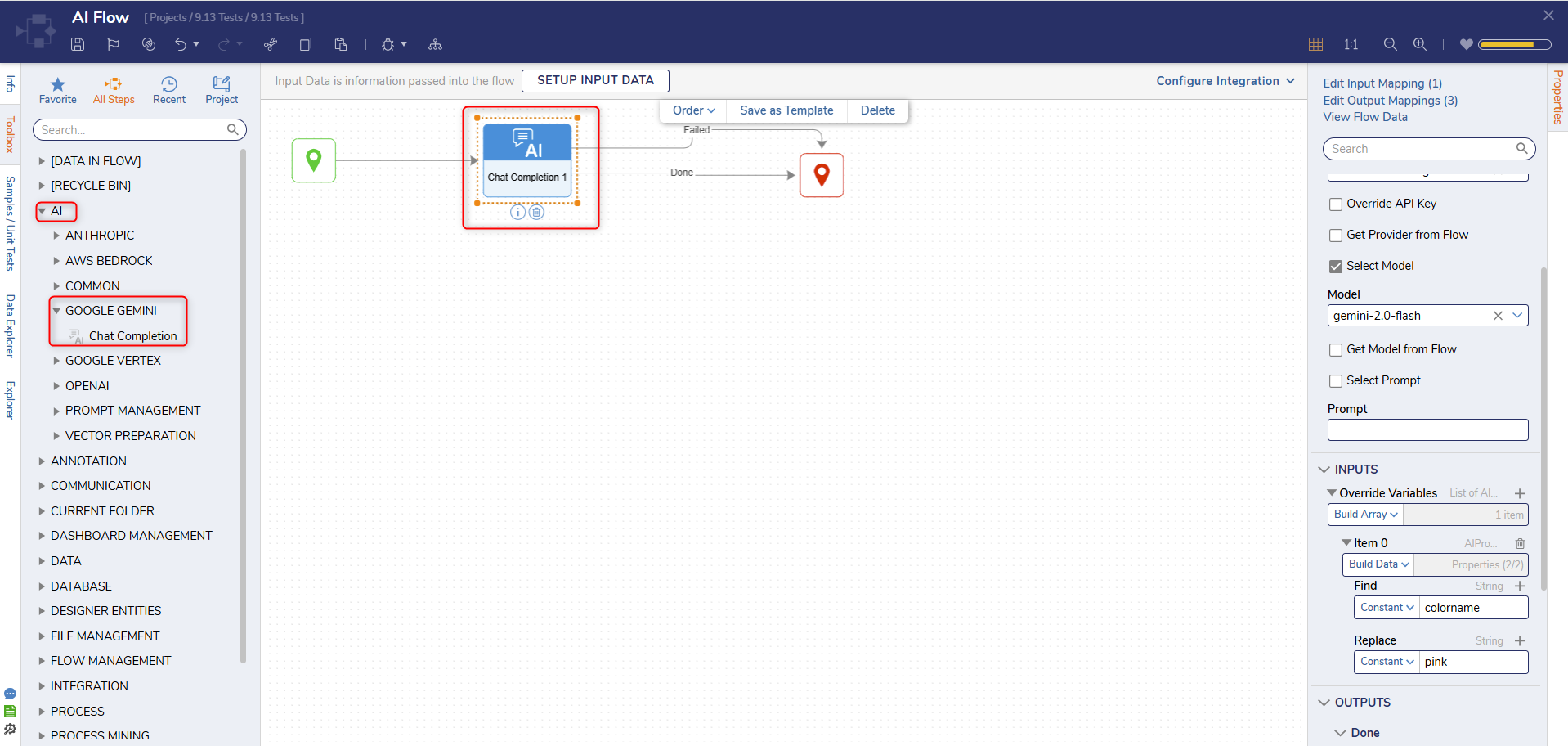

The following example demonstrates using the Chat Completion step with a Model from Google Gemini. To set up the step in Decisions:

- Drag the Chat Completion step onto the workspace and connect it to the Start and End steps.

- Navigate to the Properties Panel and configure the settings for the desired Model. In this example, gemini-2.0-flash was used as the Model, and a prompt was selected from the AI Prompt Manager.

- For the Inputs, Build an Array on Override Variables and enter the desired variables into the Find and Replace fields. Ensure these variables correspond to the chosen prompt.

After the settings and Inputs have been configured in the Properties Panel, select Debug. The Flow should generate a Chat Response similar to the one in the example screenshot.

Step Changes

| Description | Version | Release Date | Developer Task |
|---|---|---|---|
| Added the Override API Key setting. | 9.13 | July 2025 | [DT-044658] |
