Overview

| Module Details | |
|---|---|
| Core or GitHub Module | Core |
| Restart Required | No |
| Step Location | AI > Google Gemini |
| Settings Location | Settings > Google Gemini Settings |
| Prerequisites | |

The Google Gemini Module enables Users to access Google Gemini AI through the built-in Chat Completion step.

Once prerequisites are installed, the Module automatically exposes the Chat Completion step in the Toolbox. This step includes a properties panel with fields that can be configured according to user preference.

Google Gemini works directly with both the OpenAI and Anthropic AI Modules, so Users can switch between Modules without reformatting prompts or replacing steps.

Configuration/Properties

Installation

- To set up the Module in Decisions, navigate to Settings > Administration > Features, locate the Google Gemini Module, and select Install.

- The AI Common Module will automatically install in the background if this is the first AI Module installed in the environment.

Creating a Dependency:

- After installing the Module, navigate to the project that will use Google Gemini.

- Then, within the Project, navigate to Manage > Configuration > Dependencies.

- Select Manage, navigate to Modules, and select Decisions.AI.GoogleGemini.

- Select Confirm to save the change.

For more information on installing Modules, please visit Installing Modules in Decisions.

For more information about creating a project dependency for a Module, please visit Adding Modules as a Project Dependency.

Available Steps

The table below provides a list of all the steps within the module.

| Step Name | Description |
|---|---|
| Chat Completion | The Chat Completion step submits prompts to a large language model (LLM) and returns the LLM's response. Prompts can be added directly or pulled from the Flow. Users can also select the model that processes the prompt. |

Available Models

As of v9.13, the Gemini Module supports Gemini 2.0 Flash, Gemini 2.5 Flash, and Gemini 2.5 Pro. This list will change as Google deprecates models. Versions earlier than v9.13 support only models that have since been deprecated.

Use Case: Utilizing Chat Completion Step with Google Gemini AI

To utilize Google Gemini's Chat Completion step in Decisions:

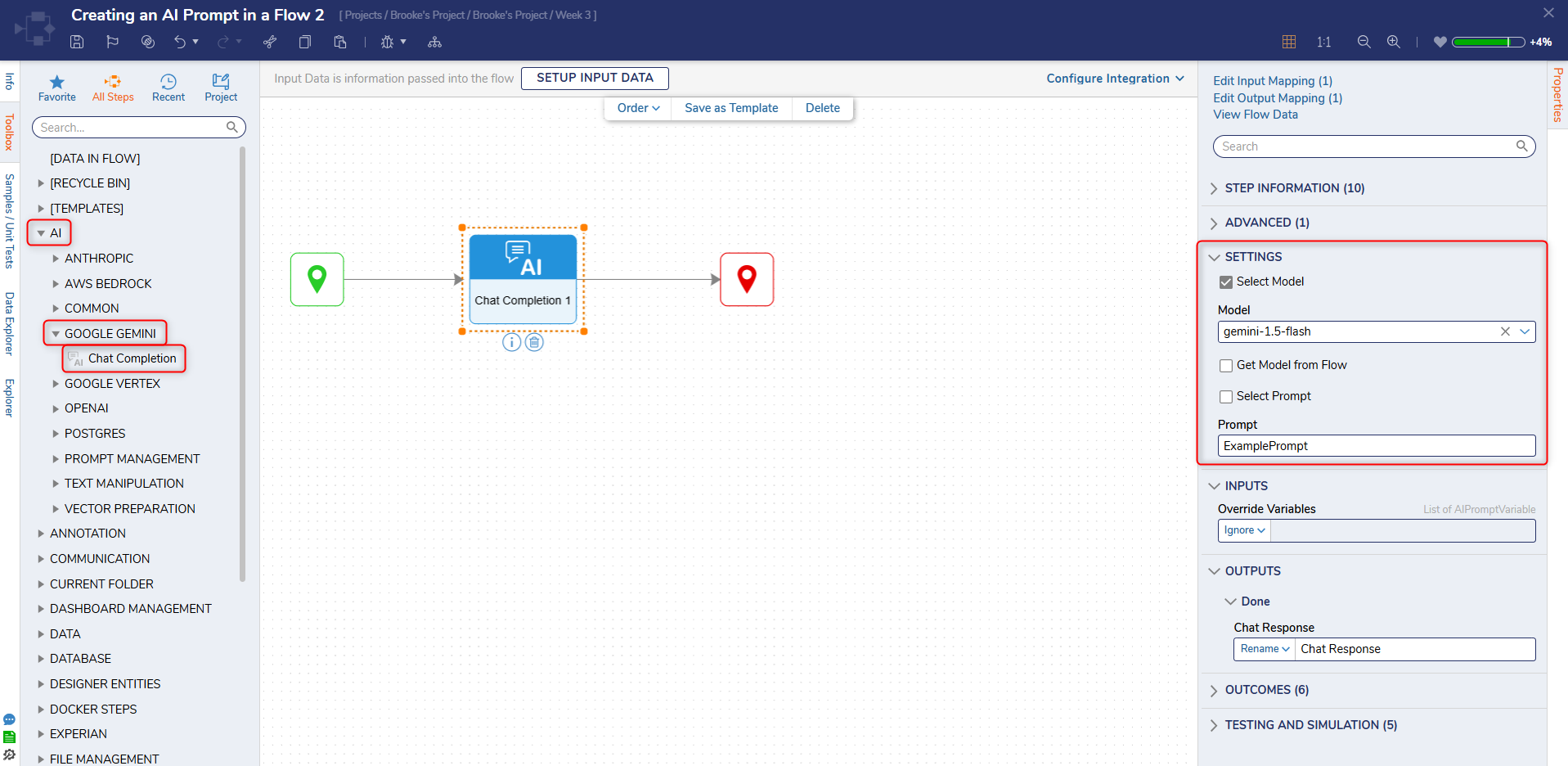

- Drag and drop the Chat Completion step onto the workspace and select the Step.

- Navigate to the Properties Panel on the right and check the Override Default Provider box.

- Select Decisions.AI.GoogleGemini from the drop-down menu.

- Next, check the Select Model box and choose the desired Google Gemini Model from the drop-down menu. Then either create a constant prompt directly in the Flow or use a pre-constructed prompt from the AI Prompt Manager.

- Users have two options when selecting an AI Prompt:

  - To create a prompt within the Flow, check Get Prompt from Flow.

  - To use a prompt created in the AI Prompt Manager, click Select Prompt.

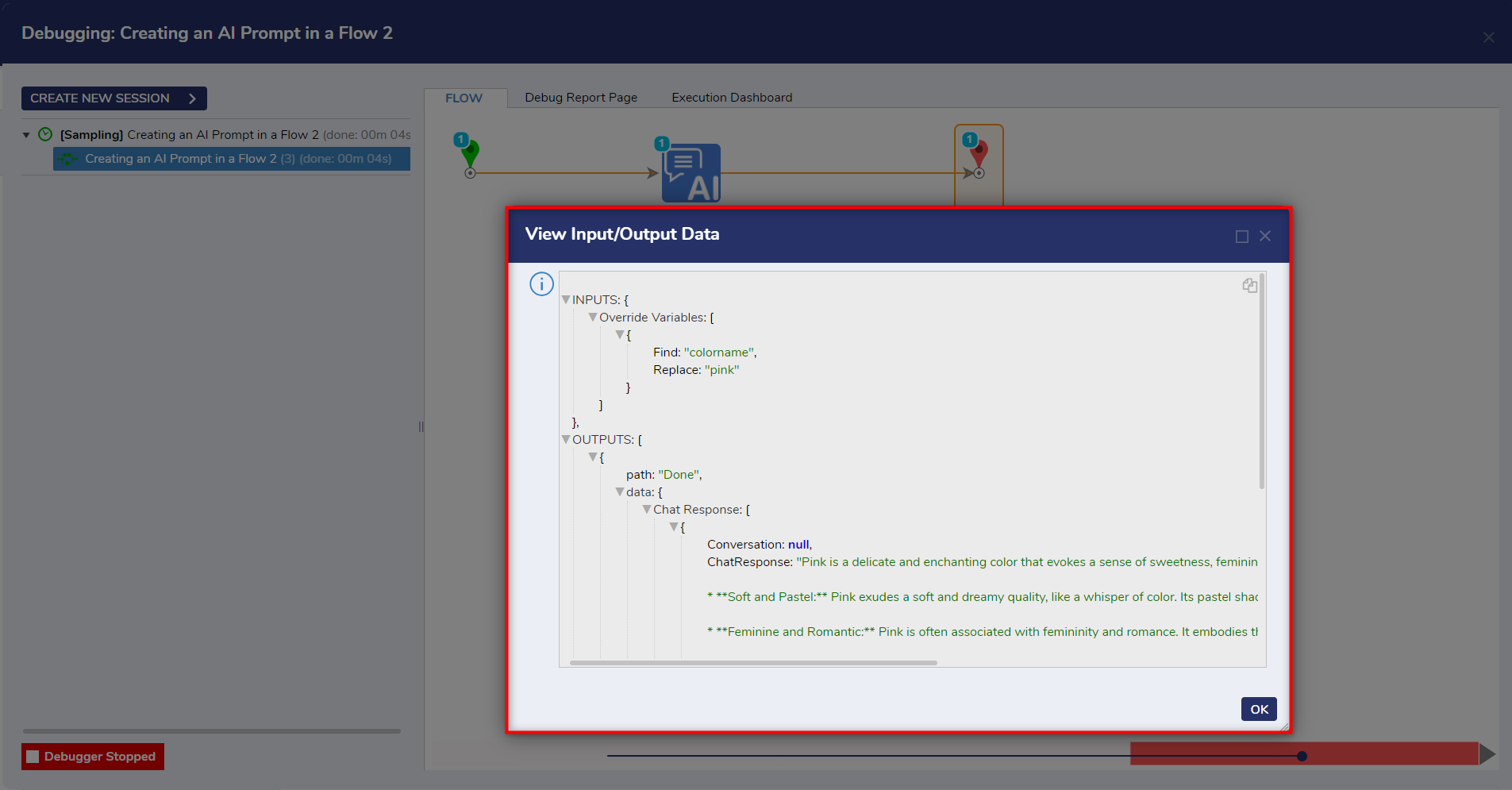

- To add Find and Replace Variables, select Build Array on Override Variables and Build Data on Item 0.

- Add desired variables in both the Find and Replace fields that correspond with the AI Prompt.

- Once the desired settings have been saved, select Debug.

- The Debugger displays the Chat Response, which reflects the settings configured in the Properties Panel.
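Conceptually, each Find and Replace pair built under Override Variables substitutes a piece of text in the AI Prompt before it is sent to the model. The sketch below is a minimal Python illustration, not the Module's implementation: it assumes literal, case-sensitive replacement applied in array order, and the function name `apply_find_replace` and the `{{CustomerName}}` placeholder syntax are hypothetical.

```python
from typing import List, Tuple


def apply_find_replace(prompt: str, variables: List[Tuple[str, str]]) -> str:
    """Hypothetical sketch: apply each (find, replace) pair to the prompt in order.

    Assumes literal, case-sensitive replacement of every occurrence; the
    Decisions Module's actual matching rules are not documented here.
    """
    result = prompt
    for find, replace in variables:
        # Skip empty Find values; str.replace("", x) would insert x between every character.
        if not find:
            continue
        result = result.replace(find, replace)
    return result


# Hypothetical prompt text, standing in for one saved in the AI Prompt Manager.
template = "Summarize the support ticket from {{CustomerName}} about {{Product}}."

# Analogous to Override Variables: Item 0, Item 1, each with Find and Replace fields.
override_variables = [
    ("{{CustomerName}}", "Jane Doe"),
    ("{{Product}}", "the billing portal"),
]

print(apply_find_replace(template, override_variables))
```

Because pairs are applied in order in this sketch, a Replace value that contains a later Find string would be substituted again; whether the Module behaves the same way is not stated in this article.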

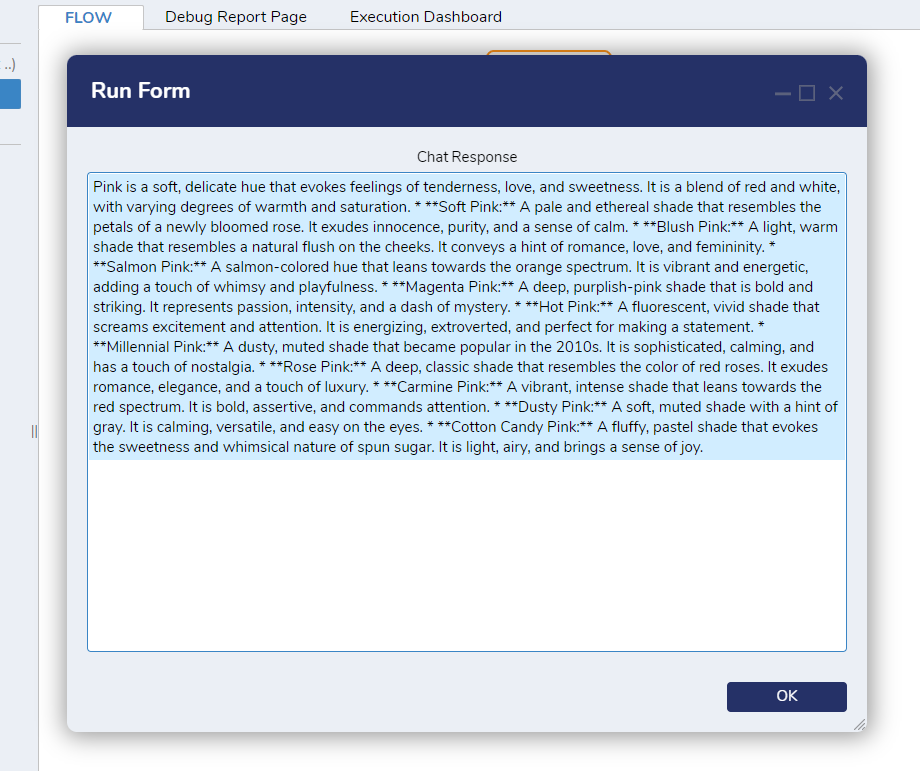

The example below shows how a Chat Response displays on a Form when debugging.

Please visit Utilizing AI Modules in Decisions for more information on AI Modules.

Feature Changes

| Description | Version | Release Date | Developer Task |
|---|---|---|---|
| Supported models have changed. | 9.13 | July 2025 | [DT-044859] |