| Step Details | |

| Introduced in Version | 9.3 |

| Last Modified in Version | 9.15 |

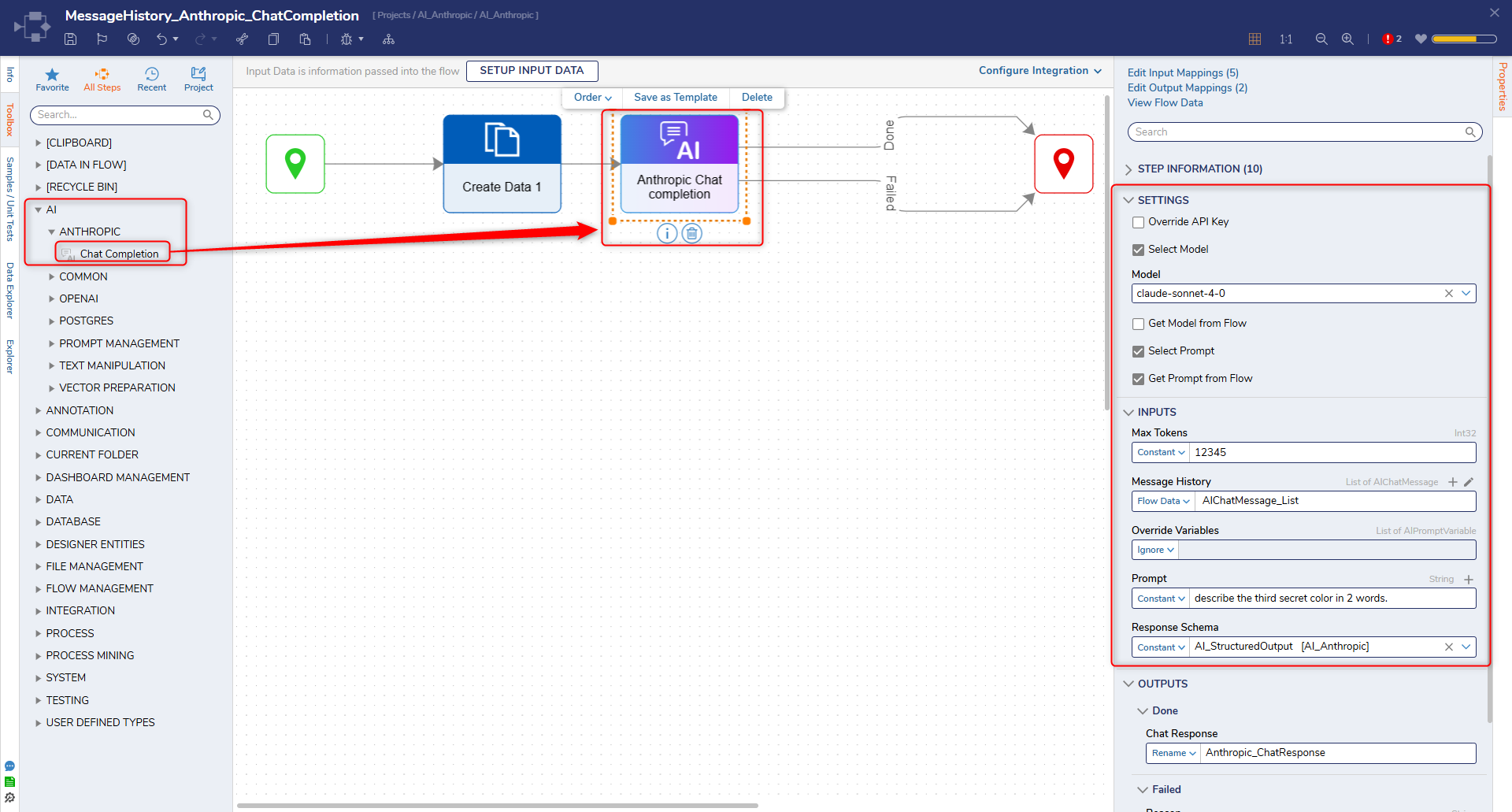

| Location | AI > Anthropic |

This step submits a prompt to Anthropic's large language model (LLM) and returns the model's response. Users can either enter prompts directly or pull them from the Flow, and can select the specific model that processes the prompt. The step is useful for automating processes that require language generation, such as creating chat responses, generating content, and performing other natural language processing tasks within the Flow.

Prerequisites

This step requires installing the AI Common and AI Anthropic modules and adding their dependencies to the Project before it is available in the toolbox. An Anthropic account is also required, and its API key must be added in System > Settings > Anthropic AI Settings.

For more information on model status, please visit: https://docs.anthropic.com/en/docs/resources/model-deprecations#model-status.

Properties

Settings

| Property | Description | Data Type |
|---|---|---|
| Override API Key (v9.13+) | Instead of using the key set at the System level on the module configuration, enabling this setting allows Users to add another key. | |
| Select Model | Checkbox to enable the Model selection in settings. | Boolean |
| Model | List of Anthropic models available for use in the Flow. | --- |
| Get Model from Flow | Checkbox to enable the Model Name input property. Disables Select Model and Model settings when checked. | Boolean |
| Prompt | Input prompt for the LLM. | String |
| Select Prompt | Enables access to prompts stored in the AI Prompt Manager through the "Select Prompt" property. When checked, this disables the direct Prompt input option. | Boolean |
| Get Prompt from Flow | Checkbox to enable the Prompt input property. Disables Select Prompt when checked. | Boolean |
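
The way these checkboxes override one another can be sketched in a few lines of Python. This is only an illustration of the precedence described in the table, not a Decisions API: `StepSettings`, `model_source`, and `prompt_source` are hypothetical names, and the `"unspecified"` result marks a case the table does not describe.

```python
from dataclasses import dataclass


@dataclass
class StepSettings:
    """Hypothetical mirror of the step's checkboxes (not a Decisions type)."""
    select_model: bool = False
    get_model_from_flow: bool = False
    select_prompt: bool = False
    get_prompt_from_flow: bool = False


def model_source(s: StepSettings) -> str:
    # "Get Model from Flow" disables Select Model and Model when checked.
    if s.get_model_from_flow:
        return "flow"
    if s.select_model:
        return "model_setting"
    # Assumption: the table does not say which model is used otherwise.
    return "unspecified"


def prompt_source(s: StepSettings) -> str:
    # "Get Prompt from Flow" disables Select Prompt when checked.
    if s.get_prompt_from_flow:
        return "flow"
    # "Select Prompt" disables the direct Prompt input when checked.
    if s.select_prompt:
        return "prompt_manager"
    return "direct"


if __name__ == "__main__":
    s = StepSettings(select_model=True, get_model_from_flow=True)
    print(model_source(s), prompt_source(s))
```

In this sketch the Flow-sourced option always takes priority, which matches the "Disables ... when checked" wording in the table.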

Inputs

| Property | Description | Data Type |

|---|---|---|

| Max Tokens | Maximum number of tokens allowed in the input string | Int32 |

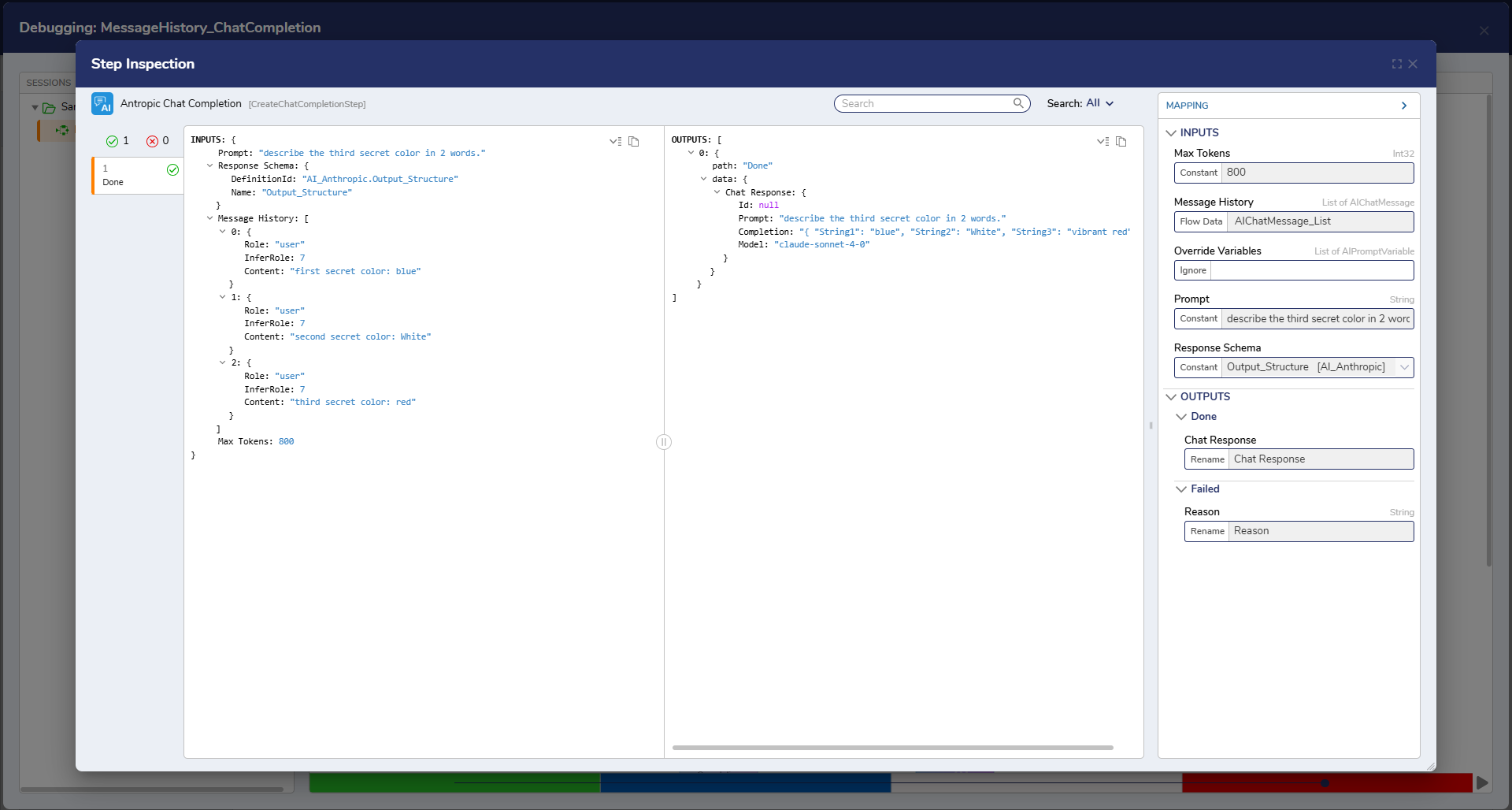

| Message History | Provides prior conversation context to help the LLM generate accurate and coherent responses. | String |

| Response Schema (v9.15+) | Defines the structured JSON format that the LLM must follow when generating its output. By specifying the expected fields and data types, the Response Schema ensures the model returns consistent, machine-readable data that can be accurately mapped into the workflow. | Any |

Outputs

| Property | Description | Data Type |
|---|---|---|
| Chat Response | Response from Anthropic's LLM based on the input prompt. | String |

About the Chat Response Structure:

The Chat Response returned from the Chat Completion step consists of four components: ID, prompt, model, and completion. The completion component holds the model’s output. When a Response Schema, such as AI_StructuredOutput, is provided, the completion field returns a structured JSON object that matches the object defined by that schema.

For example:

{ "StringValue": "Sky color", "StringValue2": "Ocean hue", "IntegerValue": 0, "IntegerValue2": 255, "BooleanValue": false, "BooleanValue2": true, "DecimalValue": 0.0, "DecimalValue2": 1.0 }

This ensures the output is predictable, machine-readable, and can be reliably passed to subsequent steps within the Flow.
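
As a rough illustration of why a predictable structure helps downstream steps, the following Python sketch parses the example completion shown above and checks each field's type. The expected types are inferred from the example values only, and `check_completion` is a hypothetical helper rather than part of the product.

```python
import json

# Example completion copied from the documentation above.
EXAMPLE_COMPLETION = (
    '{ "StringValue": "Sky color", "StringValue2": "Ocean hue", '
    '"IntegerValue": 0, "IntegerValue2": 255, '
    '"BooleanValue": false, "BooleanValue2": true, '
    '"DecimalValue": 0.0, "DecimalValue2": 1.0 }'
)

# Assumption: field types are inferred from the example values.
EXPECTED_TYPES = {
    "StringValue": str, "StringValue2": str,
    "IntegerValue": int, "IntegerValue2": int,
    "BooleanValue": bool, "BooleanValue2": bool,
    "DecimalValue": float, "DecimalValue2": float,
}


def matches(value, expected):
    """Return True if a parsed JSON value fits the expected Python type."""
    if expected is bool:
        return isinstance(value, bool)
    # bool is a subclass of int in Python, so reject it for numeric fields.
    if isinstance(value, bool):
        return False
    if expected is float:
        # Accept whole numbers for decimal fields, since JSON may omit ".0".
        return isinstance(value, (int, float))
    return isinstance(value, expected)


def check_completion(text):
    """Parse a completion string and list any missing or mistyped fields."""
    data = json.loads(text)
    problems = []
    for name, expected in EXPECTED_TYPES.items():
        if name not in data:
            problems.append(f"missing field: {name}")
        elif not matches(data[name], expected):
            problems.append(f"wrong type for {name}")
    return data, problems


if __name__ == "__main__":
    data, problems = check_completion(EXAMPLE_COMPLETION)
    print(data["StringValue"], problems)
```

A check like this is one way a consuming system might guard against a response that does not match the schema before mapping the values onward.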

Step Changes

| Description | Version | Release Date | Developer Task |
|---|---|---|---|
| Added new input 'Schema' to all AI Chat Completion Steps. | 9.15 | September 2025 | [DT-045124] |
| Added the Override API Key setting. | 9.13 | July 2025 | [DT-044658] |