Step Details

| Introduced in Version | Process Mining 3.2 |
|---|---|
| Last Modified in Version | Process Mining 3.2 |

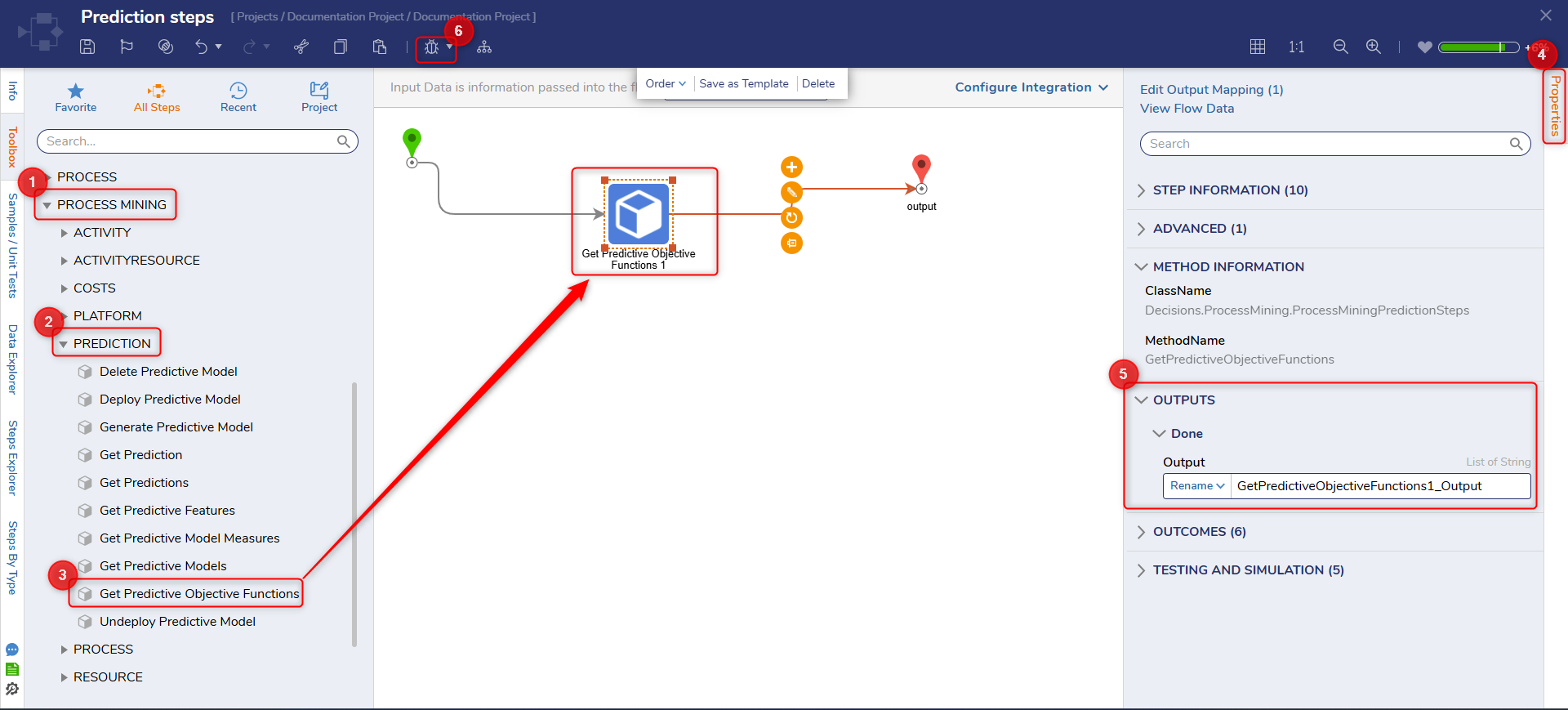

| Location | Process Mining > Predictions |

The Get Predictive Objective Functions step in the Decisions Flow toolbox returns a standardized list of all available model evaluation metrics. Unlike steps that require input, this step takes no inputs, so you can use it to quickly retrieve the full set of performance metrics.

Properties

Outputs

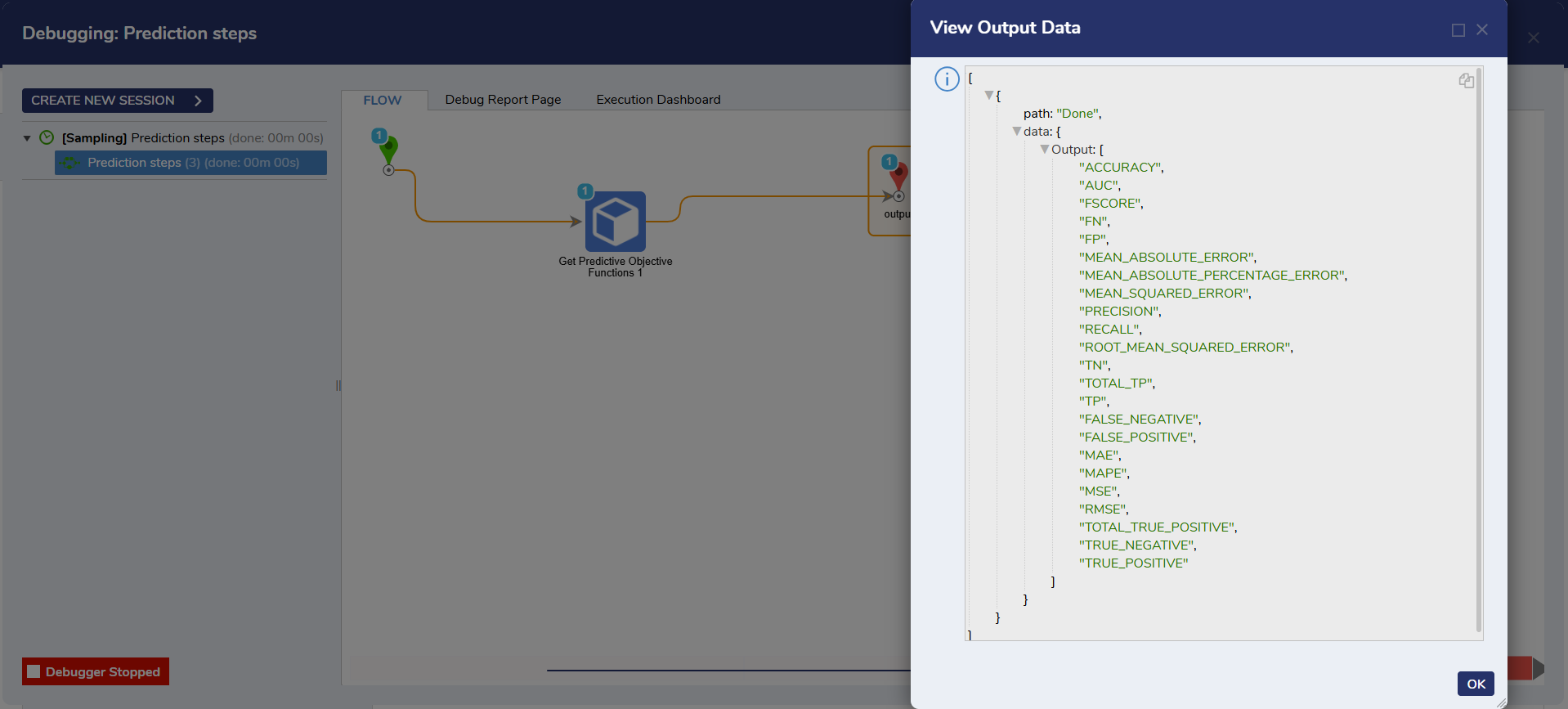

| Property | Description | Data Type |
|---|---|---|
| GetPredictiveModelMeasures_Output | A set of model evaluation metrics (listed below). | -- |

The output contains the following metrics:

- Accuracy: Proportion of all predictions that are correct.
- Precision: Proportion of positive predictions that are actually positive.
- Recall: Proportion of actual positive cases that the model correctly identifies.
- F-Score: Harmonic mean of precision and recall.
- AUC: Area under the ROC (receiver operating characteristic) curve.
- True Positive: Number of positive cases correctly predicted as positive.
- True Negative: Number of negative cases correctly predicted as negative.
- False Positive: Number of negative cases incorrectly predicted as positive.
- False Negative: Number of positive cases incorrectly predicted as negative.
- MEAN_ABSOLUTE_ERROR (MAE): Average absolute difference between predicted and actual values.
- MEAN_ABSOLUTE_PERCENTAGE_ERROR (MAPE): Average absolute difference between predicted and actual values, expressed as a percentage of the actual values.
- MEAN_SQUARED_ERROR (MSE): Average squared difference between predicted and actual values.
- ROOT_MEAN_SQUARED_ERROR (RMSE): Square root of the average squared difference between predicted and actual values.
- TOTAL_TP: Total number of true positive predictions made by the model.
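The step itself only lists these metrics; it does not compute them. To make the definitions concrete, the following is a minimal Python sketch of the standard textbook formulas. It assumes that F-Score means F1 (equal weighting of precision and recall), that AUC is the usual ROC AUC, and that MAPE is reported in percent. The function names `classification_metrics`, `auc`, and `regression_metrics` are hypothetical and are not part of Process Mining; the product's own calculations may differ in details such as zero-division handling.

```python
"""Illustrative sketch of standard metric formulas (hypothetical helpers, not a Process Mining API)."""
import math
from typing import Dict, Sequence


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Derive accuracy, precision, recall and F-score from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # Assumption: F-Score is F1, the harmonic mean of precision and recall
    f_score = (2 * precision * recall / (precision + recall)
               if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f_score": f_score}


def auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC: probability that a random positive case scores higher than a random negative one.

    Ties count as half a win. This pairwise form is O(n_pos * n_neg) and is meant for clarity only.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def regression_metrics(actual: Sequence[float], predicted: Sequence[float]) -> Dict[str, float]:
    """MAE, MAPE (in percent), MSE and RMSE for paired actual/predicted values."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(actual)
    errors = [p - a for a, p in zip(actual, predicted)]
    mae = sum(abs(e) for e in errors) / n
    # MAPE is undefined when any actual value is zero (raises ZeroDivisionError here)
    mape = 100 * sum(abs(e / a) for e, a in zip(errors, actual)) / n
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)  # RMSE is the square root of MSE
    return {"mae": mae, "mape": mape, "mse": mse, "rmse": rmse}


if __name__ == "__main__":
    print(classification_metrics(tp=40, tn=45, fp=5, fn=10))
    print(auc([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1]))
    print(regression_metrics([100.0, 200.0, 400.0], [110.0, 190.0, 400.0]))
```

For example, with 40 true positives, 45 true negatives, 5 false positives, and 10 false negatives, accuracy is 0.85, recall is 0.8, and the F-score reduces to 2·TP / (2·TP + FP + FN) = 80/95 ≈ 0.842.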